Abstract

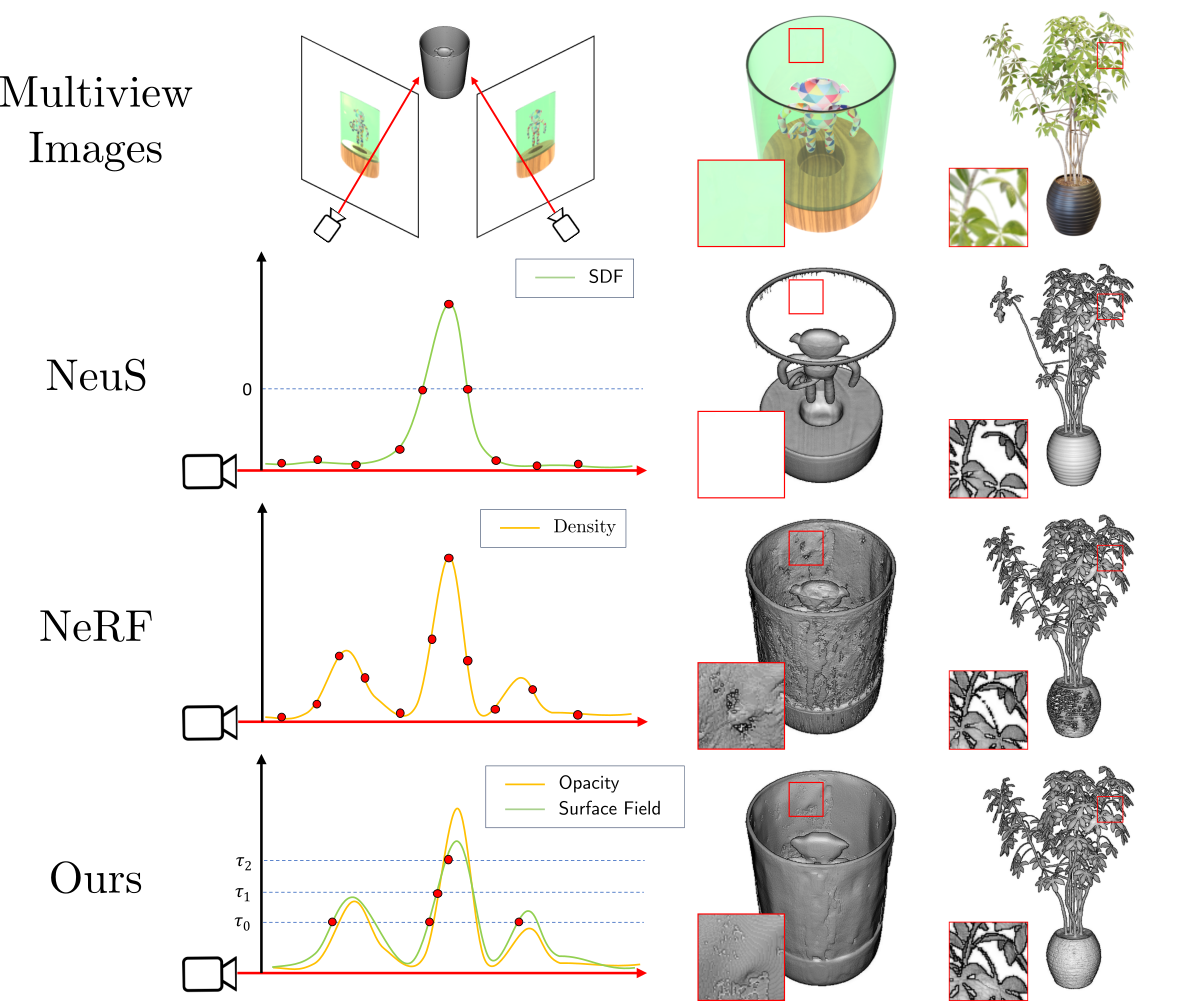

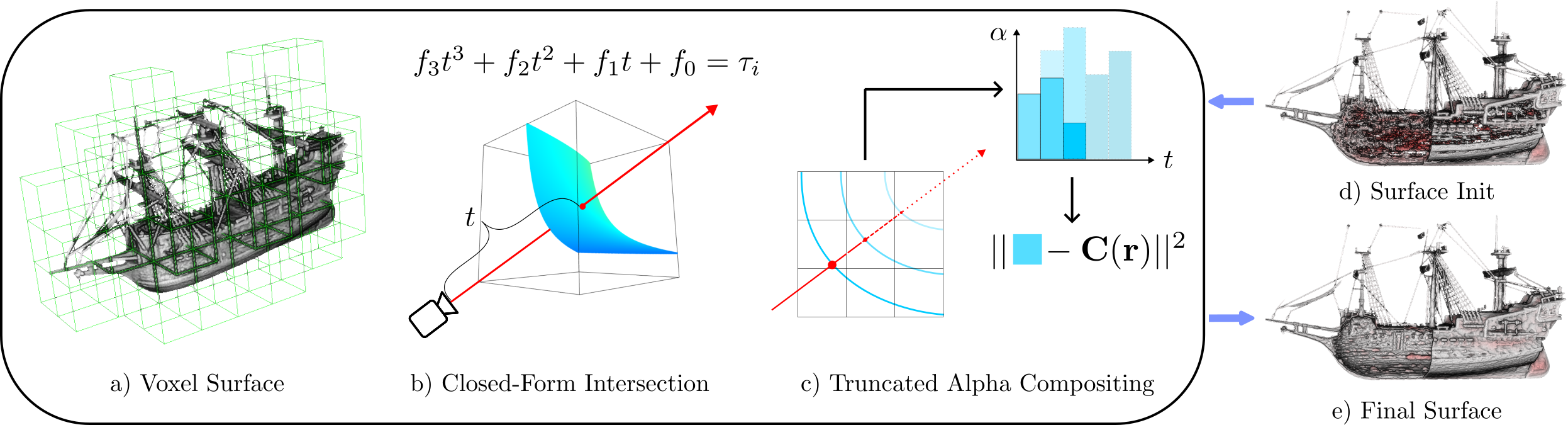

Implicit surface representations such as the signed distance function (SDF) have emerged as a promising approach for image-based surface reconstruction. However, existing optimization methods assume opaque surfaces and therefore cannot properly reconstruct translucent surfaces or sub-pixel thin structures, which also exhibit low opacity because of the blending effect. While neural radiance field (NeRF) based methods can model semi-transparency and synthesize novel views with photo-realistic quality, their volumetric representation tightly couples geometry (surface occupancy) with material properties (surface opacity), and therefore cannot be easily converted into surfaces without introducing artifacts. We present αSurf, a novel scene representation with decoupled geometry and opacity for the reconstruction of surfaces with translucent or blending effects. Ray-surface intersections on our representation can be found in closed form via analytical solutions of cubic polynomials, avoiding Monte Carlo sampling, and are fully differentiable by construction. Our qualitative and quantitative evaluations show that our approach can accurately reconstruct translucent and extremely thin surfaces, achieving better reconstruction quality than state-of-the-art SDF and NeRF methods.
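To illustrate how a ray-surface intersection can reduce to a cubic polynomial, here is a minimal sketch. The abstract does not describe the discretization, so the sketch assumes scalar values stored at the eight corners of a unit voxel and interpolated trilinearly. Under that assumption the field along a ray is a product of three linear terms in the ray parameter `t`, hence a cubic. The function names (`ray_cubic_coeffs`, `solve_cubic`, `voxel_hits`) are hypothetical, and the solver is the textbook Cardano and trigonometric formula, not necessarily the one used by the authors.

```python
import math

def poly_mul(p, q):
    """Multiply two polynomials given as ascending coefficient lists."""
    out = [0.0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def ray_cubic_coeffs(corners, origin, direction, level=0.0):
    """Ascending coefficients [c0, c1, c2, c3] of f(origin + t*direction) - level,
    where f trilinearly interpolates corners[i][j][k] over the unit voxel (assumed layout)."""
    coeffs = [0.0, 0.0, 0.0, 0.0]
    for i in (0, 1):
        for j in (0, 1):
            for k in (0, 1):
                term = [corners[i][j][k]]
                for idx, o, d in zip((i, j, k), origin, direction):
                    # Per-axis weight: x(t) or 1 - x(t), with x(t) = o + t*d.
                    weight = [o, d] if idx == 1 else [1.0 - o, -d]
                    term = poly_mul(term, weight)
                coeffs = [c + s for c, s in zip(coeffs, term)]
    coeffs[0] -= level
    return coeffs

def _cbrt(x):
    """Real cube root that also handles negative inputs."""
    return math.copysign(abs(x) ** (1.0 / 3.0), x)

def solve_cubic(a, b, c, d, eps=1e-12):
    """Real roots of a*t^3 + b*t^2 + c*t + d = 0, sorted ascending.
    Falls back to the quadratic or linear formula when leading terms vanish."""
    if abs(a) < eps:
        if abs(b) < eps:
            return [] if abs(c) < eps else [-d / c]
        disc = c * c - 4.0 * b * d
        if disc < 0.0:
            return []
        s = math.sqrt(disc)
        return sorted({(-c - s) / (2.0 * b), (-c + s) / (2.0 * b)})
    # Depressed cubic u^3 + p*u + q = 0 via the substitution t = u - b/(3a).
    p = (3.0 * a * c - b * b) / (3.0 * a * a)
    q = (2.0 * b ** 3 - 9.0 * a * b * c + 27.0 * a * a * d) / (27.0 * a ** 3)
    shift = -b / (3.0 * a)
    disc = (q / 2.0) ** 2 + (p / 3.0) ** 3
    if disc > 0.0:
        # One real root (Cardano's formula).
        s = math.sqrt(disc)
        return [_cbrt(-q / 2.0 + s) + _cbrt(-q / 2.0 - s) + shift]
    if p == 0.0:
        # disc <= 0 with p == 0 forces q == 0: a triple root.
        return [shift]
    # Three real roots (trigonometric form); p < 0 in this branch.
    r = 2.0 * math.sqrt(-p / 3.0)
    phi = math.acos(max(-1.0, min(1.0, 3.0 * q / (p * r)))) / 3.0
    return sorted(r * math.cos(phi - 2.0 * math.pi * k / 3.0) + shift for k in range(3))

def voxel_hits(corners, origin, direction, level=0.0, tol=1e-9):
    """Ray parameters t >= 0 where the ray meets the level set inside the unit voxel."""
    c0, c1, c2, c3 = ray_cubic_coeffs(corners, origin, direction, level)
    hits = []
    for t in solve_cubic(c3, c2, c1, c0):
        point = [o + t * d for o, d in zip(origin, direction)]
        if t >= 0.0 and all(-tol <= x <= 1.0 + tol for x in point):
            hits.append(t)
    return hits
```

For example, calling `voxel_hits` with corner values equal to `x - 0.5` and a ray travelling along the x-axis through the voxel centre yields a single intersection at the mid-plane. Because every step is an explicit arithmetic expression in the corner values, the root is in principle differentiable with respect to them away from degenerate cases such as repeated roots.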

More results